Researchers Develop Gravity-Defying Robotic Hand With a Human-Like Touch

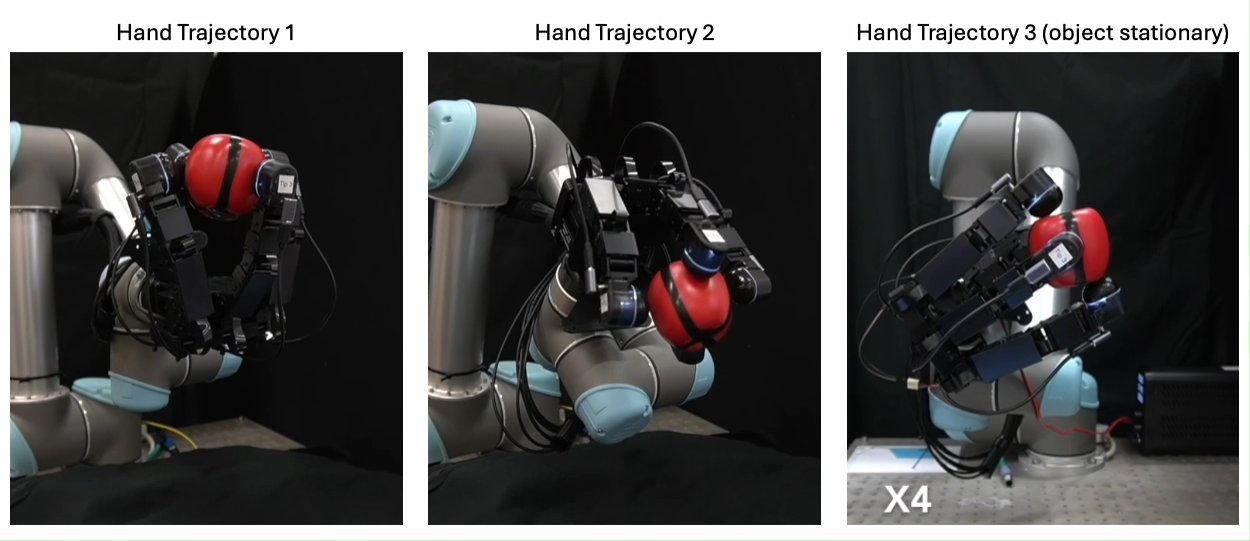

University of Bristol researchers successfully developed a four-finger robot hand with tactile fingertips. The hand is capable of rotating objects in all directions while maintaining its grasp.

A team of researchers from the University of Bristol has developed a highly tactile, four-fingered robotic hand that can manipulate objects in any orientation. The research has potential uses in pick-and-place applications, as well as in more complex tasks as the researchers further develop the hand's dexterity.

The four-fingered robotic hand can manipulate objects in any direction, independent of the direction of gravity. Image used courtesy of the University of Bristol

Robotic Manipulation Technologies

The field of robotic grasping and manipulation began to take shape just before the 1990s, with seminal work focused on analytical approaches to grasping and on two-dimensional object modeling. Data-driven methods, grasp planning, and feature-centered grasp synthesis moved the field forward in necessary incremental steps, and three-dimensional sensing emerged in 2009 thanks to the availability of depth sensors.

In the early 2010s, robotic grasping and manipulation became contact-centered. Multi-fingered end-effectors introduced new, more dexterous grasping capabilities that moved beyond simple pick-and-place applications. The next prominent step, between 2010 and 2020, was the arrival of generative models, deep learning, and the integration of human-robot interaction with deep interactive learning. Now, developing robots that handle and manipulate materials the way humans do requires biomimetic tactile sensing, which mimics the complex sensing features that contribute to the sensitivity of human touch.

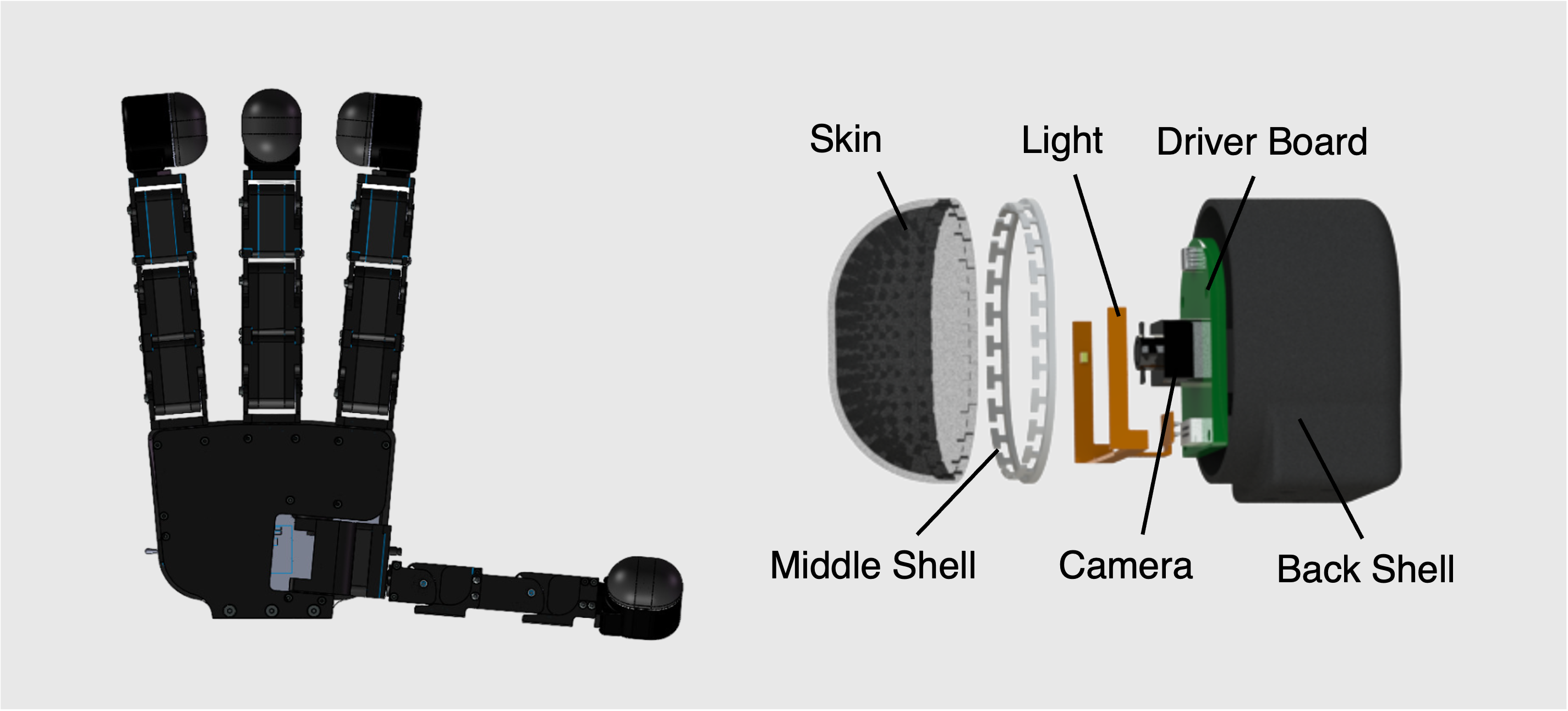

A look inside the tactile fingertip sensors that provide feedback to the robot hand. Image used courtesy of the University of Bristol

Robotic Tactile Sensing

Researchers from the University of Bristol, led by Professor of Robotics and AI Nathan Lepora, have developed an innovative tactile system that mimics the internal sensing mechanics of human skin. The four-fingered robotic hand incorporates a 3D-printed mesh of pin-like papillae under the skin of each fingertip. In human skin, dermal papillae are small protrusions at the boundary between the dermis and the epidermis; the term also describes the small bud-like protrusions on the tongue that contain the taste buds. The benefit of using 3D printing for the mesh design is the flexibility to combine hard and soft materials in ways that replicate biological structures.

In further experimentation, the collaborative team tested the tactile system with the hand upside down. The hand struggled at first and dropped the object it was handling. Following more targeted training, it was able to manipulate the object upside down without dropping it.

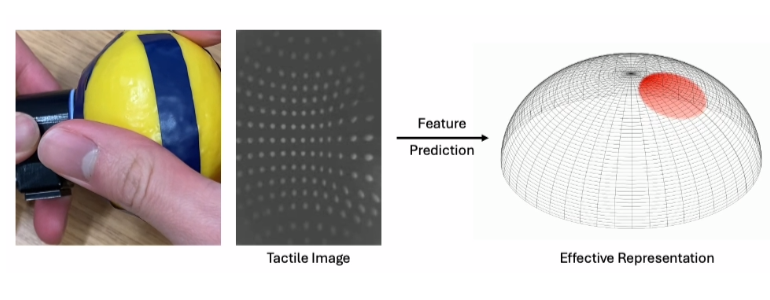

A visualization showing the contact between an object and the tactile fingertip. A tactile image captures the deformation of the surface, with the dome representing the fingertip. The center of the shaded zone on the dome denotes the contact position, while its size indicates the force. Image used courtesy of the University of Bristol
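The relationship described in the caption, where the center of the shaded zone gives the contact position and its size indicates the force, can be illustrated with a minimal sketch. It assumes the tactile image has already been reduced to a small grid of deformation values. The function name `contact_summary`, the threshold, and the use of contact area as a stand-in for force are all illustrative assumptions, not the researchers' method.

```python
from typing import List, Optional, Tuple


def contact_summary(
    deformation: List[List[float]], threshold: float = 0.5
) -> Optional[Tuple[float, float, int]]:
    """Return (row_center, col_center, area) of the contact zone, or None.

    `deformation` is a hypothetical 2D grid of surface-deformation values.
    Cells at or above `threshold` are treated as being in contact.
    """
    total = 0.0    # sum of deformation over the contact zone
    row_sum = 0.0  # deformation-weighted sum of row indices
    col_sum = 0.0  # deformation-weighted sum of column indices
    area = 0       # number of cells in contact (assumed proxy for force)
    for r, row in enumerate(deformation):
        for c, value in enumerate(row):
            if value >= threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
                area += 1
    if area == 0:
        return None  # nothing is touching the fingertip
    return (row_sum / total, col_sum / total, area)


# Toy example: a 2x2 patch of contact in the middle of a 4x4 grid.
toy_image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
summary = contact_summary(toy_image)
```

In this toy grid, the contact center falls midway between the four touched cells, and a harder press that deforms more cells would report a larger area.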

Bridging the Sim-to-Real Gap

The researchers devised the AnyRotate system for in-hand rotation of objects about multiple axes, independent of the direction of gravity. The system relies on observation models trained on complex tactile data, collected using a tactile sensor on a flat surface stimulus fitted beneath a force/torque sensor. This setup allows for the collection of tactile images paired with their respective contact forces and robot poses.
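To make the shape of such a dataset concrete, the sketch below pairs each tactile image with the readings logged alongside it. The class name `TactileSample`, its field layout, the units, and the choice to treat the images as model inputs and the forces and poses as training targets are all assumptions made for illustration; the source does not specify them.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TactileSample:
    """One hypothetical record from the data-collection setup."""

    tactile_image: List[List[float]]           # 2D grid of pixel intensities
    contact_force: Tuple[float, float, float]  # (fx, fy, fz); newtons assumed
    robot_pose: Tuple[float, ...]              # pose at capture; layout assumed


def to_training_pairs(
    samples: List[TactileSample],
) -> Tuple[List[List[List[float]]], List[List[float]]]:
    """Split records into inputs (images) and targets (force + pose values)."""
    inputs = [s.tactile_image for s in samples]
    targets = [list(s.contact_force) + list(s.robot_pose) for s in samples]
    return inputs, targets


# Two made-up records, for illustration only.
samples = [
    TactileSample([[0.0, 0.1], [0.2, 0.3]], (0.0, 0.0, 1.5), (0.0, 0.0, 2.0)),
    TactileSample([[0.4, 0.5], [0.6, 0.7]], (0.1, 0.0, 2.5), (1.0, 0.0, 3.0)),
]
inputs, targets = to_training_pairs(samples)
```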

The researchers trained a convolutional neural network (CNN) on the contact data for use in the simulation. A CNN is a deep learning architecture that interprets and analyzes visual data. Its effectiveness comes largely from its convolutional layers, which build feature maps by sliding filters across the image being processed and responding to features such as corners, textures, and repeated patterns.
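The filter operation behind a feature map can be shown with a short, dependency-free sketch. It slides a hand-picked 2x2 vertical-edge filter over a toy 4x4 image. In a trained CNN the filter values are learned rather than chosen by hand, and the function name `convolve2d` and the toy data here are illustrative assumptions, not part of the researchers' code.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    This is the cross-correlation that CNN convolutional layers compute.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map: Matrix = []
    for r in range(out_h):
        out_row: List[float] = []
        for c in range(out_w):
            acc = 0.0
            # Multiply the filter by the image patch under it and sum the products.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            out_row.append(acc)
        feature_map.append(out_row)
    return feature_map


# Toy image: dark on the left (0.0), bright on the right (1.0).
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
]

# Hand-picked filter that responds where brightness increases left to right.
edge_kernel = [
    [-1.0, 1.0],
    [-1.0, 1.0],
]

feature_map = convolve2d(image, edge_kernel)
```

The resulting 3x3 feature map is zero wherever the image is uniform and peaks in the middle column, where the dark-to-bright edge sits.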

According to the researchers, the next step for the four-fingered robot hand is to move from simpler rotation and pick-and-place activities to dexterous manual tasks such as assembling Lego pieces.
