Creating a More Sensible Industrial Robot: The Sense of Touch

Using advanced sensing technology, robotic skin allows grippers and manipulators to “feel” and handle objects without damaging them. While emulating human touch is still in its infancy, it does show promise for future applications.

For years, machine vision has been one of the primary ways robots collect data about the objects they handle. Millions, if not billions, of dollars have been invested in the sharpest, highest-resolution cameras, the fastest imaging software, and the smartest artificial intelligence (AI) routines for detecting objects and planning movement paths that avoid collisions with, or damage to, the objects being moved.

Figure 1. Touch can be used to determine the surface texture, stiffness, hardness, temperature, and weight of solid objects. Image used courtesy of Canva

Simulating Human Touch

Take a step back. The purpose of robots in the workplace is to replace humans, particularly in dangerous, repetitive, boring, or precise jobs. Human workers use more than just their sense of sight to manipulate objects, and an important part of handling objects properly is a worker's sense of touch.

Touch can be used to determine the surface texture, stiffness, hardness, temperature, and weight of solid objects. It can also, to some degree, determine the presence of contamination or moisture, ripeness of fruits and vegetables, and other more specialized properties.
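As a rough illustration of how one of these properties might be measured, the Python below estimates stiffness by fitting a straight line to force-versus-displacement samples recorded while a fingertip presses into an object. Stiffness is only one possible cue for properties such as ripeness. This is a minimal sketch: the function name, units, and sample readings are hypothetical, and a real tactile system would rely on its own calibration and contact models.

```python
from statistics import mean


def estimate_stiffness(displacement_mm: list[float], force_n: list[float]) -> float:
    """Estimate contact stiffness (N/mm) as the least-squares slope of force vs. displacement.

    Both lists hold paired samples recorded while a (hypothetical) tactile
    fingertip presses into an object.
    """
    if len(displacement_mm) != len(force_n) or len(force_n) < 2:
        raise ValueError("need at least two paired samples")
    x_bar = mean(displacement_mm)
    y_bar = mean(force_n)
    # Spread of the displacement samples; zero means the finger never moved.
    sxx = sum((x - x_bar) ** 2 for x in displacement_mm)
    if sxx == 0:
        raise ValueError("displacement must vary during the press")
    # Covariance-style sum between displacement and force.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(displacement_mm, force_n))
    return sxy / sxx


# Illustrative, made-up readings: a firm object pushes back harder per millimetre.
press_mm = [0.0, 0.5, 1.0, 1.5]
firm = estimate_stiffness(press_mm, [0.0, 1.0, 2.0, 3.0])
soft = estimate_stiffness(press_mm, [0.0, 0.2, 0.4, 0.6])
print(f"firm: {firm:.2f} N/mm, soft: {soft:.2f} N/mm")
```

A least-squares slope is used rather than a single force/displacement ratio so that one noisy sample does not dominate the estimate.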

In humans, the brain uses all of this information to decide how to pick up, manipulate, catch, or handle an object. The ability to judge how hard and where to grip an object develops naturally in childhood. In gym class, the sense of touch gives a child's brain the information it needs to catch both a foam dodgeball and a similarly sized medicine ball, even though the latter is much heavier. It also allows them to grip and pitch a baseball firmly, yet pick a tomato from the vine delicately without crushing it. Most importantly, these actions are performed, at least at a rudimentary level, without much conscious thought.

More capabilities can be taught to humans with practice. The first time a welding student dons protective gloves, they lose some of their sense of touch. They handle the welding equipment awkwardly until practice has trained their brain to feel through the gloves. Even so, an experienced welder still cannot pick up a dime off the ground while wearing welding gloves.

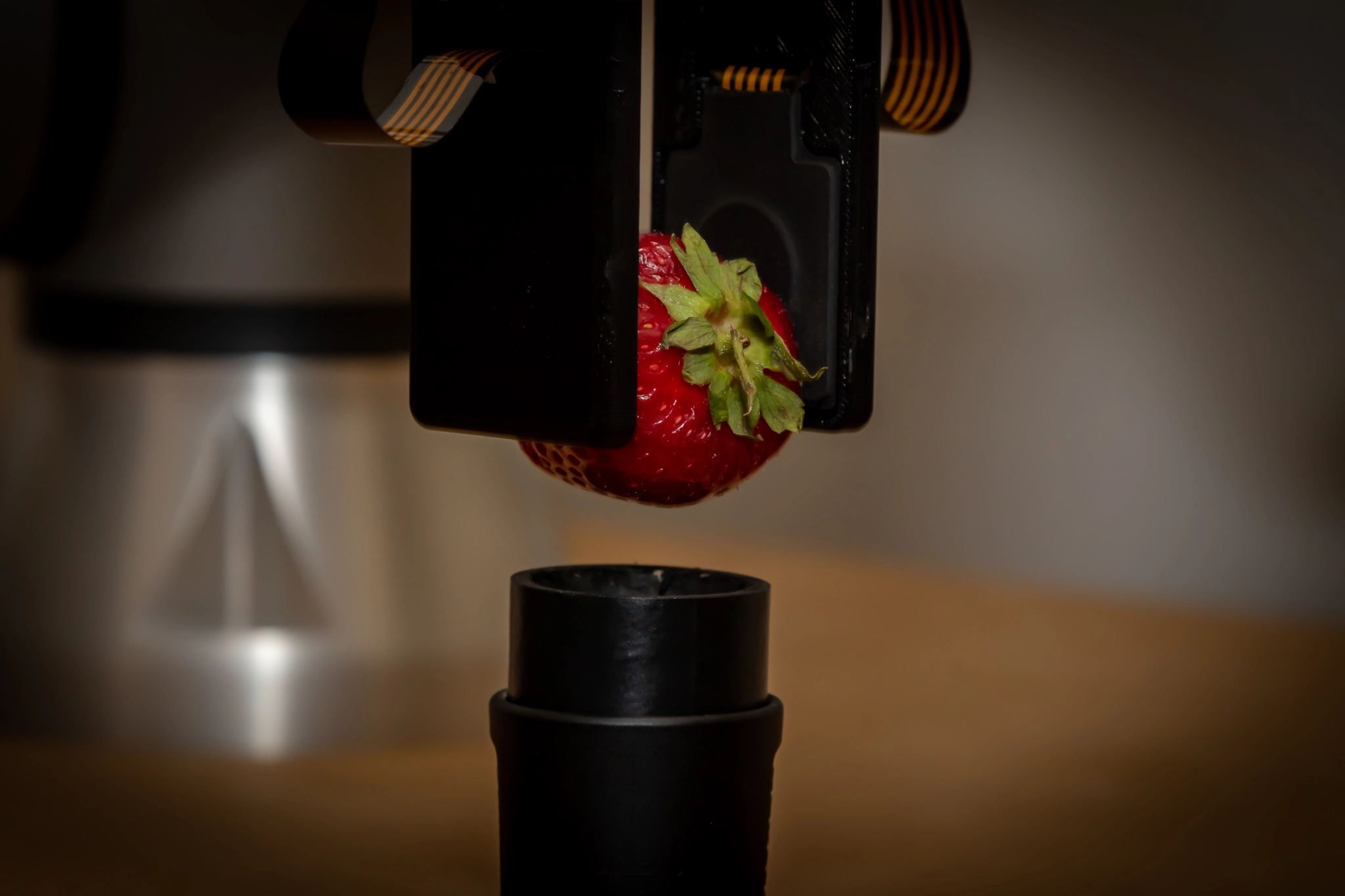

Figure 2. Simulating the sense of touch in robots requires sensors capable of detecting all of the subtleties that the human hand can detect with enough processing power to handle this incoming data. Image used courtesy of Touchlab

Robotic Tactile Sense

If humans are so good at picking up and manipulating things, why use robots at all? While human touch and brain processing power are impressive, the human body is naturally limited in some respects. Humans have only so many joints and so much strength, and can withstand only certain conditions. Robotic manipulators, on the other hand, can be designed with almost any combination of joints to cover the required range of motion. Furthermore, robots can withstand hotter and colder temperatures, lift heavier objects, and move much faster or more precisely than humans.

To simulate the sense of touch in robots, there must be sensors capable of detecting the subtleties that the human hand can detect, and there must be enough processing power to handle the incoming data. From there, the processor can make control decisions on how to change the grip position or pressure used to handle the object.
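The sense, decide, and act chain in the paragraph above can be sketched as a simple feedback loop. The Python below is a minimal, hypothetical example: it assumes the gripper reports a measured contact force and a boolean slip flag on every cycle, and it uses an incremental update that adds a fixed fraction of the force error to the command each cycle. The class name, gain, step size, and limits are illustrative assumptions, not any vendor's controller.

```python
from dataclasses import dataclass


@dataclass
class GripController:
    """Hypothetical incremental grip-force controller (illustrative only)."""

    target_n: float            # desired contact force, newtons
    max_n: float               # hard force limit the command must never exceed
    gain: float = 0.5          # fraction of the force error added to the command per cycle
    slip_step_n: float = 0.2   # target increase applied whenever slip is sensed
    command_n: float = 0.0     # force command most recently sent to the gripper

    def update(self, measured_n: float, slip_detected: bool) -> float:
        """Run one sense-decide-act cycle and return the new force command."""
        if slip_detected:
            # The object is sliding: ask for a firmer grip, but never beyond the limit.
            self.target_n = min(self.target_n + self.slip_step_n, self.max_n)
        error = self.target_n - measured_n
        self.command_n += self.gain * error
        # Clamp so the command stays between zero and the safety limit.
        self.command_n = max(0.0, min(self.command_n, self.max_n))
        return self.command_n


# Toy simulation: assume an ideal gripper whose measured force equals the last command.
ctrl = GripController(target_n=2.0, max_n=5.0)
measured = 0.0
for _ in range(20):
    measured = ctrl.update(measured, slip_detected=False)
print(f"settled near {measured:.3f} N")
```

The hard clamp reflects the design goal of handling objects without damaging them: however the target drifts in response to slip, the command cannot exceed `max_n`.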

Haptic Perception with Pressure Sensors

The first step to simulating touch is to develop pressure sensors that behave much like the nerve endings in the human body. If a blindfolded person receives a pinprick on a finger, they know exactly where the point is touching their skin. This is because each fingertip contains many nerve endings, providing fine spatial resolution of pressure. Thanks to this fine resolution, a person can pick up a thumbtack safely by sensing where the point is and applying only enough pressure to hold the tack without piercing the skin.

One of the big challenges in developing this type of pressure sensor for a robot is matching that resolution. There have been great strides in this field, thanks in part to the touchscreen technologies that have become prevalent on smart devices. While the sensing mechanism is a little different, comparing first-generation touchscreens with the latest phones makes it apparent that touch-sensing resolution keeps getting finer.
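To make the idea of locating a pinprick concrete, the Python below computes the pressure-weighted centroid of a grid of sensing elements ("taxels"). This is a minimal sketch under stated assumptions: the grid layout, the `pitch_mm` spacing, and the noise `threshold` are hypothetical, and the returned total is a sum of raw readings rather than a calibrated force.

```python
from typing import Optional


def contact_location(
    pressure: list[list[float]], pitch_mm: float, threshold: float = 0.0
) -> Optional[tuple[float, float, float]]:
    """Return (x_mm, y_mm, total) for the pressure-weighted centroid of a taxel grid.

    pressure[row][col] holds one reading per taxel and pitch_mm is the assumed
    spacing between neighbouring taxels. Readings at or below threshold are
    treated as noise. Returns None when nothing is touching the sensor.
    """
    total = 0.0
    sum_x = 0.0
    sum_y = 0.0
    for row_idx, row in enumerate(pressure):
        for col_idx, p in enumerate(row):
            if p > threshold:
                total += p
                sum_x += p * col_idx * pitch_mm
                sum_y += p * row_idx * pitch_mm
    if total == 0.0:
        return None
    return sum_x / total, sum_y / total, total


# A "pinprick" on the centre taxel of a 3 x 3 grid with 2 mm spacing.
print(contact_location([[0, 0, 0], [0, 5, 0], [0, 0, 0]], pitch_mm=2.0))
# Equal readings on two neighbours put the estimate halfway between them.
print(contact_location([[0, 1, 1]], pitch_mm=2.0))
```

Because the centroid interpolates between neighbouring taxels, this kind of estimate can place a contact more finely than the element spacing alone, although real sensors still need calibration to do so reliably.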

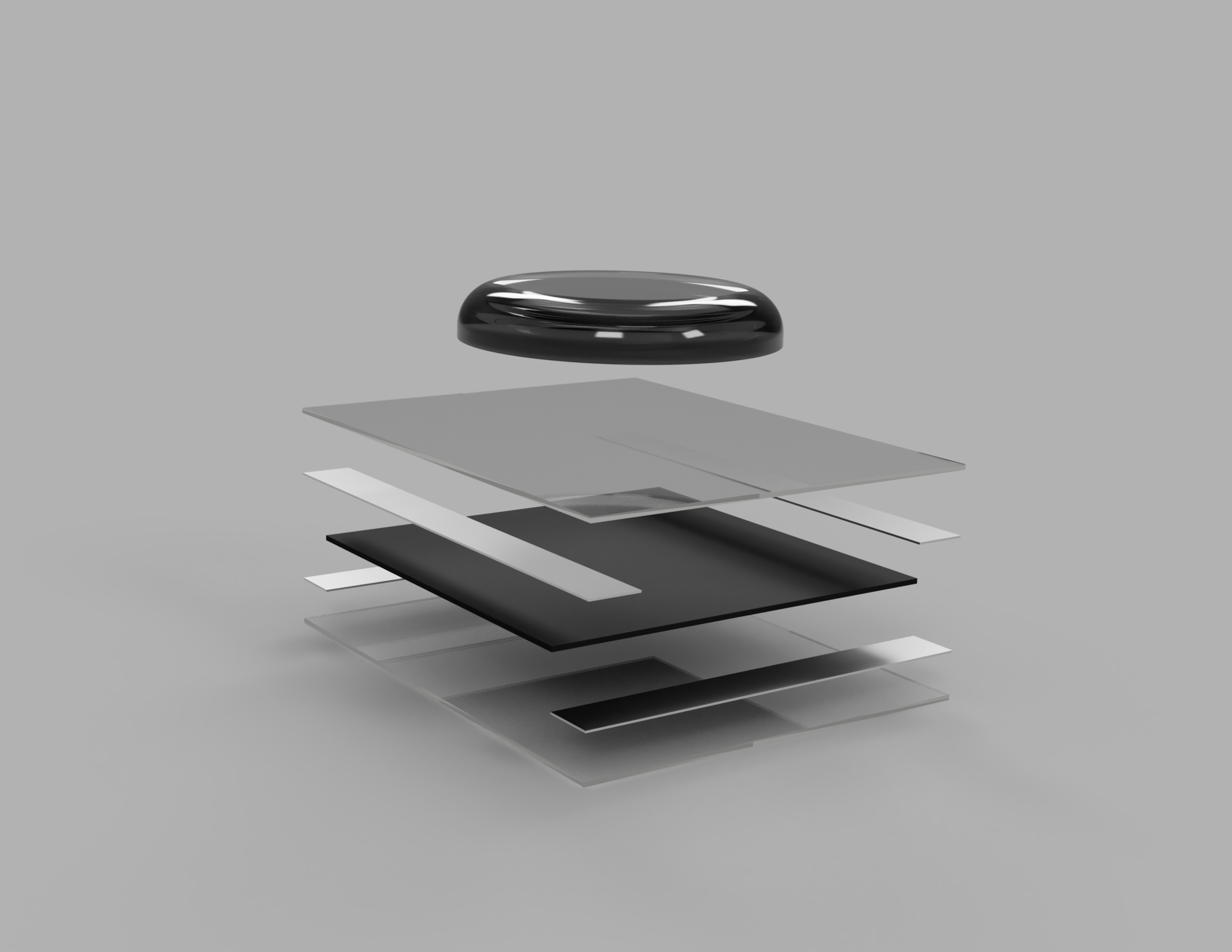

Companies such as Touchlab are developing this sensor technology. Touchlab has several multilayer sensors that can determine pressure and location with only four output wires. The sensors are designed to mimic human skin, with enough resolution and software control that robots can roll pens on a table, feel texture, and determine pressure. Backed by software, these sensors can perform tasks such as keeping pressure below the human pain threshold, which may benefit the ergonomics and occupational health and safety markets.

Figure 3. Multilayer, compact pressure sensor from Touchlab. Image used courtesy of Touchlab
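Keeping pressure below a pain threshold could, for example, be enforced by scaling down a force command whenever the skin reports a pressure peak that is too high. The Python below is a hedged sketch, not Touchlab's software: it assumes the skin delivers a pressure map in kilopascals and that peak pressure scales roughly in proportion to applied force. The function name, the 0.9 safety margin, and the 100 kPa value in the usage line are hypothetical placeholders; a real limit would have to come from published ergonomic data.

```python
def limit_force_command(
    command_n: float,
    pressure_map_kpa: list[list[float]],
    limit_kpa: float,
    margin: float = 0.9,
) -> float:
    """Scale a grip-force command so the peak contact pressure stays under limit_kpa.

    Assumes peak pressure scales roughly in proportion to applied force, which
    is only an approximation (contact area usually grows as force increases).
    """
    peak = max((p for row in pressure_map_kpa for p in row), default=0.0)
    allowed = margin * limit_kpa  # leave some headroom below the hard limit
    if peak <= allowed:
        return command_n  # already comfortably below the limit
    # Shrink the command so the predicted peak lands at the allowed level.
    return command_n * allowed / peak


# 100 kPa is a placeholder for illustration, not a measured pain threshold.
print(limit_force_command(10.0, [[50.0, 200.0]], limit_kpa=100.0))
```

Because the proportional assumption is only approximate, a practical system would re-read the pressure map on the next cycle and apply the limit again rather than trust a single correction.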

Potential Robotic Applications

Once these pressure sensors and software routines are fully developed, they could be deployed across a number of industries.

In agriculture, robot harvesters, egg turners, dairy cow milking machines, and similar machines could have a more delicate yet accurate grip, reducing losses of fruits, vegetables, and eggs and perhaps minimizing pain to livestock during such operations.

In medicine, some patient examinations could be performed robotically, freeing up time for nurses to perform other tasks. This will also minimize exposure to disease, as a robot can be sterilized more easily than a nurse rushing between patient rooms.

Exoskeletons are another potential market for these sensors. For heavy materials handling, workers can don an exoskeleton that performs much of the mechanical holding and lifting, while the human drives the movement. With these skin-like pressure sensors, the exoskeleton could help prevent fatigue by finding pressure points and places where excess stress is being placed on the wearer's body. This will be important because exoskeletons will likely be available only in certain sizes, while humans come in a continuous range of sizes, so there will never be a perfect fit for everyone.

Figure 4. Exoskeleton on a baggage handler. Image used courtesy of German Bionic
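Finding pressure points on an exoskeleton wearer could, in principle, start with watching a pressure map over time and flagging spots that stay high. The Python below is a minimal sketch under assumed conditions: the skin delivers equally sized grids of pressure readings in kilopascals at a steady frame rate, and a spot counts as a concern once it exceeds a threshold for several consecutive frames. The function name, threshold, and frame count are illustrative assumptions, not German Bionic's or any other vendor's method.

```python
def sustained_hotspots(
    frames: list[list[list[float]]], threshold_kpa: float, min_frames: int
) -> set[tuple[int, int]]:
    """Return (row, col) cells that stayed above threshold_kpa for min_frames consecutive frames.

    frames is a time-ordered list of equally sized pressure grids.
    """
    if not frames:
        return set()
    rows, cols = len(frames[0]), len(frames[0][0])
    streak = [[0] * cols for _ in range(rows)]  # current run length per cell
    flagged: set[tuple[int, int]] = set()
    for frame in frames:
        for r in range(rows):
            for c in range(cols):
                if frame[r][c] > threshold_kpa:
                    streak[r][c] += 1
                    if streak[r][c] >= min_frames:
                        flagged.add((r, c))
                else:
                    streak[r][c] = 0  # pressure relieved, so the run starts over
    return flagged


# Made-up 1 x 2 pressure maps over three frames: only the right-hand cell stays high.
frames = [[[5.0, 50.0]], [[5.0, 60.0]], [[70.0, 55.0]]]
print(sustained_hotspots(frames, threshold_kpa=40.0, min_frames=3))
```

Requiring consecutive frames, rather than a single reading, keeps brief spikes (such as a momentary bump) from being reported as a fit problem.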

Future of Haptics

Replicating human touch relies on both advanced sensing technology and smart algorithms that decide what to do with all of the data from the pressure sensors. While these technologies are still in their infancy, they do show promise for future applications.

It is impossible to model everything the human brain and nervous system can do, but with some creative engineering, perhaps enough can be duplicated and improved upon so that robots can perform repetitive, dangerous, precise, or boring chores in service to their human masters.
